It’s trendy for people to attack the use of ChatGPT for copywriting, but I believe there are very legitimate uses for it. I love the branding Microsoft is using for its AI additions. I think “Copilot” is the sweet spot. Hell - it would have been a better name for Tesla’s fraudulent “Full Self-Driving,” but that’s a different post entirely. :)

It can give you keywords for actual research. Yes - it’s been shown that ChatGPT can just dream up research papers and statistics it thinks make sense. However, a core problem people have when learning about a topic is not even knowing what keywords to search Google for.

You can just ask ChatGPT for an overview of the topic and you’ll get a reply with all sorts of relevant topic-related keywords you can use for actual research.

Some people are terrible writers. They can feed it a post and have it correct the spelling, grammar, and even the content structure so it sounds as professional as it does in their heads. You can feed it your post and have it correct, update, and even rewrite entire parts of it.

You can change the tone. Give it some text and ask it to make the tone more cheerful or analytical or anything else that would be more welcoming to your audience.

Progress beats writer’s block. A blank page is just a ball of anxiety and stress for some people who don’t even know where to start. Progress begets progress, even if the first step is saying “Hey - write a sales email that pitches our latest release to software developers with a focus on speed.”

What you get back won’t be a good pitch. It doesn’t know anything about your product or your customers. But it’s progress, and I’ve found that progress makes you feel like you can then take the next step and rewrite it for your needs instead of staring at a blank page for what feels like hours.

Sometimes, an article’s genius comes from the idea and outline. You can feed it an outline and get a professional-sounding representation of the idea you gave it that’s more readable to others.

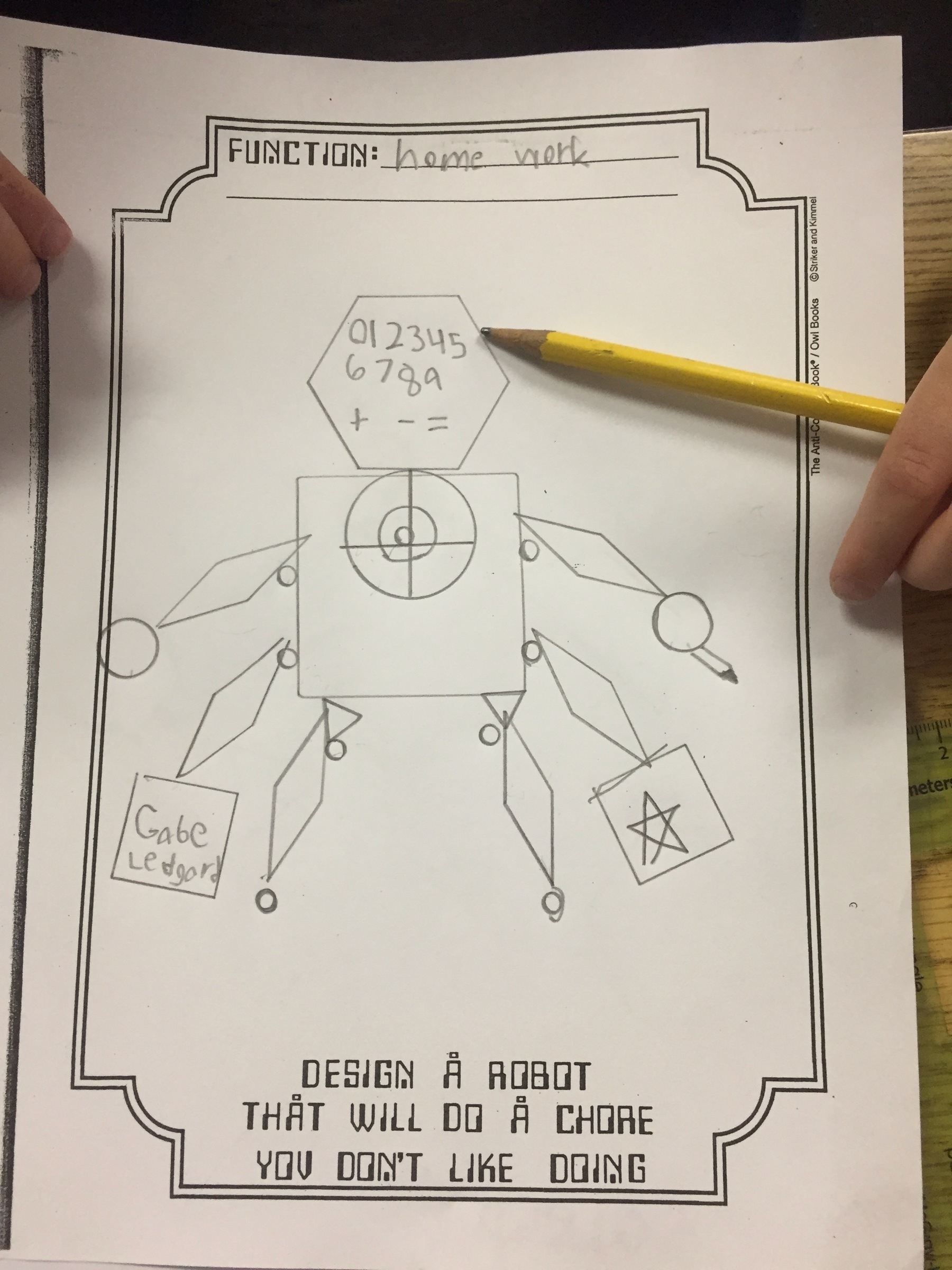

Apparently, my son knew this day would come back in 1st grade, when he was asked to design a robot that would do things he didn’t want to do. So he imagined a “home work” robot. :)

Now his middle school teachers have to tell the kids that ChatGPT papers are not acceptable and remind them that using it isn’t really learning the topic or (more importantly) learning how to have an informed opinion on a topic. :)